Happy New Year!

Goodbye 2020, welcome 2021! Positive and exciting new developments are on the horizon. As we write, the Cybersecurity Team is refining its technology. The Disaster Response Team is busy building a case-based game architecture (more about it in the following sections). And we are getting ready for two conferences: the American Educational Research Association (AERA) annual meeting and the Learning Analytics and Knowledge (LAK) Conference.

Disaster Response: Design Test Update

We opened the year with a disaster response design test. The Disaster Response Team tested a new design architecture based on discovering and resolving “caselets,” small cases that emerge in the wake of a disaster. Caselets are disaster-related incidents with a human dimension; one example is a pregnant woman trapped in her building by flooding. Players scout the map looking for caselets to resolve. The discovery and resolution of caselets were tied to a scoreboard representing four dimensions of community resilience: economic, health and safety, social, and environmental.

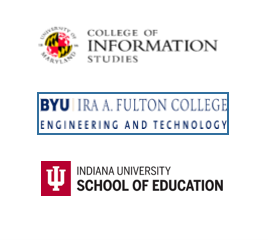

Figure 1. In-Game Communication Flow

Each of the four original specialized player roles is intended to represent a STEM element: Public Health represents science, the Public Information Officer represents technology, Public Works represents engineering, and the Data Scientist represents mathematics. Each role was assigned tokens representing crews that the player could move around the fictional community. For example, the Public Health Officer controlled ambulances and could resolve health-related cases. We also gave the two information-related roles, Public Information Officer and Data Scientist, access to unique information that needed to be processed and communicated to other team members in order to discover cases on the map.

The playtest foregrounded the importance of communication and collaboration (see Figure 1). We created a specialized in-game chat application using Slack, which gave us easy access to data on the players’ communication and collaboration patterns. As players moved around neighborhoods and assets, bots sent them messages pertinent to the area’s active case. Beth Bonsignore, Dan Hickey, Justin Giboney, and Kira Gedris participated in the design test while Derek Hansen observed the gameplay. Figure 1 shows a screenshot of this “Caselet-Based Design Test.” This initial test helped clarify some of the opportunities and challenges associated with this kind of game design.

Learning Analytics: Development Update

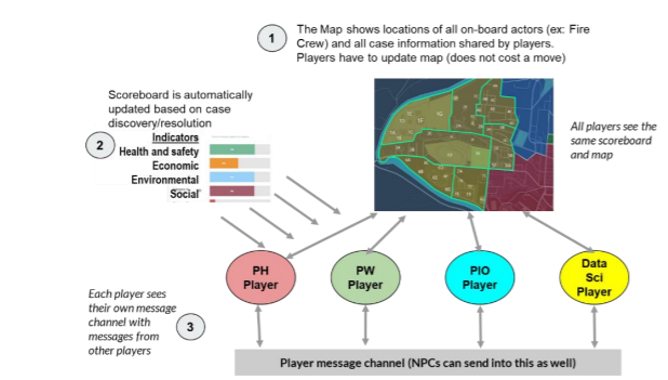

Construction of the learning analytics platform is advancing. The team has been developing components for collecting data from PCS gameplay. The information logged includes person ID, name, and institution; in-game role; messages between players; and gameplay actions by the players, including interactions with non-player characters. The data is stored in a MySQL database running on an Amazon Web Services (AWS) instance. All game data passes through an application programming interface (API) that separates the underlying database from external requests. This layer also adds security by requiring all updates to come through it rather than allowing external entities to interact with the database directly. A graphical mapping of the learning analytics platform is shown in Figure 2.

Figure 2. Learning Analytics platform schematic
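To make the idea of a single API layer in front of the database more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the project's actual code: the `GameEvent` fields, the `events` table, the event types, and the function names are all hypothetical, and the standard-library `sqlite3` module stands in for the MySQL database so the example can run anywhere.

```python
import sqlite3
from dataclasses import dataclass

# Role names come from the newsletter; everything else here is hypothetical.
ALLOWED_ROLES = {
    "Public Health",
    "Public Information Officer",
    "Public Works",
    "Data Scientist",
}
ALLOWED_EVENT_TYPES = {"message", "action"}  # assumed categories


@dataclass(frozen=True)
class GameEvent:
    """One logged gameplay record (assumed shape)."""
    person_id: str
    role: str
    event_type: str
    payload: str


def open_store() -> sqlite3.Connection:
    """Create an in-memory database; sqlite3 stands in for MySQL here."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE events "
        "(person_id TEXT, role TEXT, event_type TEXT, payload TEXT)"
    )
    return conn


def record_event(conn: sqlite3.Connection, event: GameEvent) -> None:
    """The only write path: validate the event, then insert it."""
    if event.role not in ALLOWED_ROLES:
        raise ValueError(f"unknown role: {event.role}")
    if event.event_type not in ALLOWED_EVENT_TYPES:
        raise ValueError(f"unknown event type: {event.event_type}")
    # Parameterized query, so payload text is never interpreted as SQL.
    conn.execute(
        "INSERT INTO events VALUES (?, ?, ?, ?)",
        (event.person_id, event.role, event.event_type, event.payload),
    )
    conn.commit()


def count_events(conn: sqlite3.Connection) -> int:
    """Return the number of stored events."""
    return conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
```

Because callers only ever see `record_event`, malformed records can be rejected before they reach the database, which is the kind of separation the paragraph above describes.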

Researchers can extract specific information from the API using an HTTP GET request. Extract routines will allow authorized requesters to pull data out in either comma-separated values (CSV) or JavaScript Object Notation (JSON) format. The extract process will allow data collections from different games to be compared and will allow the requester to specify which kinds of data they want.
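As a rough sketch of what such an extract might look like from the requester's side, the Python below builds a GET URL and converts a JSON response into CSV. The endpoint `https://api.example.org/extract`, the query parameter names (`games`, `fields`, `format`), and the helper functions are assumptions made for illustration; the real extract routines may differ. No network call is made, so the example runs offline.

```python
import csv
import io
import json
from urllib.parse import urlencode


def build_extract_url(base_url, game_ids, fields, fmt="json"):
    """Build a GET URL naming the games, fields, and output format.

    The parameter names are hypothetical, not the project's actual API.
    """
    if fmt not in ("json", "csv"):
        raise ValueError(f"unsupported format: {fmt}")
    query = urlencode(
        {
            "games": ",".join(game_ids),
            "fields": ",".join(fields),
            "format": fmt,
        }
    )
    return f"{base_url}?{query}"


def records_to_csv(records, fields):
    """Write a list of dict records as CSV text, keeping only `fields`."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=fields, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def json_to_csv(json_text, fields):
    """Convert a JSON array of records (as text) into CSV text."""
    return records_to_csv(json.loads(json_text), fields)
```

A requester comparing two games could call `build_extract_url("https://api.example.org/extract", ["g1", "g2"], ["person_id", "role"], fmt="csv")` and send the resulting URL with any HTTP client, along with whatever authorization the API requires.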

Some important parts of this architecture have not yet been built. We plan to take extracts from the Canvas learning management system (LMS) and to use an open-source testing platform called TAO to store test results, rather than designing and building those components from scratch. The TAO platform (www.taotesting.org) is designed to interact with other testing platforms using the Question and Test Interoperability (QTI) standard, although our tests revealed more data transfer challenges than initially expected.

Team Member Spotlight: Sahil Sharma

The lead architect of some of our important game technology is Sahil Sharma. Sahil is a second-year Master’s student majoring in Cybersecurity. He worked as an information security analyst before deciding to pursue graduate studies in the United States. Through his professional and academic experience, Sahil has become an expert designer and developer and an integral part of the Careers in Play project. He believes that working on this project is further enhancing his development skills. Sahil is developing innovative communication platforms for use in the PCS. He is also creating ways to automate the caselet architecture so that each team can seamlessly discover the caselets during gameplay. Sahil is an avid cricket fan, and in his spare time, you can find him either on the field playing cricket or on the court playing squash.

Figure 3. Sahil Sharma

Careers In Play Leadership Team

Phil Piety, PhD. University of Maryland iSchool (PI and Learning Analytics). ppiety@umd.edu

Beth Bonsignore, PhD. University of Maryland iSchool (Co-PI and Design-based Research). ebonsign@umd.edu

Derek Hansen, PhD. Brigham Young University (Co-PI and Game Technology). dlhansen@byu.edu

Dan Hickey, PhD. Indiana University School of Education (Co-PI, Learning Theory and Assessments). dthickey@umd.edu